The purpose of this study is to describe an economical approach to an existing adaptive localization technique and its implementation in the proper orthogonal decomposition-based ensemble four-dimensional variational assimilation method (PODEn4DVar). By using sparse processing and empirical orthogonal function (EOF) decomposition, the proposed sparse flow-adaptive moderation (SFAM) localization scheme significantly reduces the computational cost. The effectiveness of PODEn4DVar with SFAM localization is demonstrated with the Lorenz-96 model, in comparison with the Smoothed ENsemble Correlations Raised to a Power (SENCORP) scheme and a static localization scheme. Under an imperfect model, PODEn4DVar with SFAM localization shows a moderate improvement over the SENCORP and static localization schemes, at low computational cost.

Data assimilation is an analysis technique in which observed information is combined with forecast information to estimate the state of a system. The background error covariance (B) plays an important role in a data assimilation system. It matters for several reasons: it governs how information is spread and smoothed, imposes balance properties, affects the conditioning of the assimilation problem, and carries flow-dependent structure functions. In the standard four-dimensional variational assimilation method (4DVar) (e.g., Lewis and Derber, 1985; Le Dimet and Talagrand, 1986; Courtier and Talagrand, 1987; Courtier et al., 1994), a background error covariance model is usually applied to produce a homogeneous and isotropic B. This makes the background error covariance much easier to estimate, but it is not always appropriate. A more attractive approach is to use ensemble forecast statistics to produce B (e.g., Evensen, 1994). Ensemble-based data assimilation methods (e.g., Evensen, 1994; Qiu et al., 2007; Tian et al., 2008, 2011; Wang et al., 2010; Tian and Xie, 2012; Tian and Feng, 2015) can evolve flow-dependent estimates of the forecast error covariance (i.e., the background error covariance B) by forecasting its statistical characteristics. However, approximating the error covariance with a finite ensemble inevitably introduces sampling errors, which appear as spurious correlations over long spatial distances. Such spurious correlations can be ameliorated through localization (e.g., Houtekamer and Mitchell, 2001; Hamill et al., 2001).
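The sampling-error argument above can be illustrated with a small numerical experiment. The sketch below is only an illustration and is not taken from the paper: it draws ensembles whose true correlation matrix is the identity, so every nonzero off-diagonal sample correlation is spurious by construction. The function names and the ensemble sizes (10 and 1000) are assumptions chosen for the demonstration.

```python
import numpy as np

def sample_correlation(n, K, rng):
    """Correlation matrix estimated from K independent members of size n."""
    X = rng.standard_normal((n, K))   # true correlation is the identity matrix
    return np.corrcoef(X)             # rows are variables, columns are members

def mean_abs_offdiag(C):
    """Average magnitude of the off-diagonal (here purely spurious) correlations."""
    mask = ~np.eye(C.shape[0], dtype=bool)
    return np.abs(C[mask]).mean()

rng = np.random.default_rng(0)
small = mean_abs_offdiag(sample_correlation(40, 10, rng))    # small ensemble
large = mean_abs_offdiag(sample_correlation(40, 1000, rng))  # large ensemble
print(f"K=10: {small:.3f}   K=1000: {large:.3f}")
```

The small ensemble produces much larger spurious correlations than the large one, which is the effect that localization is meant to suppress.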

Localization is a useful strategy for reducing the effect of spurious ensemble correlations caused by small ensembles. Usually, localization constructs a moderation function and represents B as the Schur (element-wise) product of the ensemble correlation function and the moderation function. The most common way to construct the moderation function is to apply a distance-based function (e.g., Houtekamer and Mitchell, 1998, 2001; Gaspari and Cohn, 1999; Hamill et al., 2001). Studies also show that different localization functions can be used for different observation types (Houtekamer and Mitchell, 2005; Tong and Xue, 2005; Lei and Anderson, 2014) and for different state variables (Anderson, 2007, 2012). Although good results have been obtained, tuning the localization width can be expensive for large models (e.g., Anderson and Lei, 2013). Furthermore, although many large ensemble filter applications localize the observation impact in the horizontal direction, vertical localization has proved more challenging.
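As a concrete instance of distance-based localization, the following sketch builds a moderation matrix from the fifth-order piecewise rational function of Gaspari and Cohn (1999) on a periodic one-dimensional grid and applies it through a Schur product. The helper names (`gaspari_cohn`, `ring_distance`, `localize`) and the periodic-grid setting are assumptions made for illustration; they are not the configuration used in the experiments of this paper.

```python
import numpy as np

def gaspari_cohn(dist, c):
    """Gaspari-Cohn compactly supported correlation; it vanishes for dist >= 2c."""
    r = np.abs(np.asarray(dist, dtype=float)) / c
    rho = np.zeros_like(r)
    inner = r <= 1.0
    outer = (r > 1.0) & (r < 2.0)
    ri = r[inner]
    rho[inner] = -0.25 * ri**5 + 0.5 * ri**4 + 0.625 * ri**3 - (5.0 / 3.0) * ri**2 + 1.0
    ro = r[outer]
    rho[outer] = (ro**5 / 12.0 - 0.5 * ro**4 + 0.625 * ro**3
                  + (5.0 / 3.0) * ro**2 - 5.0 * ro + 4.0 - 2.0 / (3.0 * ro))
    return rho

def ring_distance(n):
    """Pairwise grid-point distances on a periodic ring of n points."""
    idx = np.arange(n)
    d = np.abs(idx[:, None] - idx[None, :])
    return np.minimum(d, n - d)

def localize(P_ens, c):
    """Schur (element-wise) product of an ensemble covariance and the moderation matrix."""
    rho = gaspari_cohn(ring_distance(P_ens.shape[0]), c)
    return rho * P_ens
```

Because the moderation matrix is zero beyond a distance of 2c, long-range entries of the ensemble covariance are removed entirely, while nearby entries are only mildly damped.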

To address the aforementioned issues, several methods have been proposed to construct the moderation function in a flow-adaptive way. The hierarchical approach of Anderson (2007) uses a Monte Carlo method that splits the ensemble into several small ensembles to assess the sampling errors and the spurious correlations. Zhou et al. (2008) introduced a multi-scale tree concept, which worked well, especially when the update calculations have the same structure as the tree. Emerick and Reynolds (2010) argued that the key point of the localization procedure is choosing the critical length(s), based on the model correlation length(s) and the range of the sensitivity matrices. Bishop and Hodyss (2007) proposed the Smoothed ENsemble Correlations Raised to a Power (SENCORP) approach, based on the online computation of a flow-dependent moderation function that damps long-range and spurious correlations. Numerical assimilation experiments demonstrate that SENCORP moderation functions are superior to non-adaptive moderation functions. However, because SENCORP requires many matrix products and eigenvector decompositions, it is computationally expensive. Furthermore, the SENCORP approach cannot be directly applied in ensemble-based 4DVar methods (e.g., Tian et al., 2010; Wang et al., 2010), in which the spurious correlations between the model grids and the observational sites should be ameliorated, since it only provides moderation functions over the model grids.

This paper describes an economical approach to implementing the SENCORP method and, through EOF decomposition and interpolation, enables it to be applied in the proper orthogonal decomposition-based ensemble 4DVar (PODEn4DVar) (Tian et al., 2010). In section 2, we describe the SENCORP localization scheme, its modified version proposed in this paper, named the sparse flow-adaptive moderation (SFAM) localization technique, and the implementation of SFAM in the PODEn4DVar method. In section 3, observing system simulation experiments (OSSEs) are conducted using PODEn4DVar with the static localization, the original SENCORP localization, and the newly proposed SFAM localization schemes, to evaluate SFAM. Finally, a summary is given in section 4.

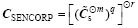

Bishop and Hodyss (2007) proposed the SENCORP algorithm to generate moderation functions from a smoothed covariance function, which damps small correlations when raised to a power. The construction of SENCORP moderation functions requires the following steps:

Step 1: Let X = [x1, x2, …, xK] be the n × K matrix of ensemble samples, where K is the total number of samples and n is the size of the model state vector.

(1)

The smoothed perturbations

Step 2: The normalized spatiotemporally smoothed ensemble perturbations

The sample correlation matrix Cs of the smoothed ensemble perturbations is thus obtained,

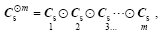

Step 3: Raise Cs to the power m to reduce small spurious correlations.

and

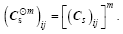

Step 4: The matrix product smoother is obtained by taking the matrix product of

where

Step 5: The SENCORP moderation function is finally given by attenuation of the spurious correlations amplified by going from

where r is the element-wise integer power.

For more details of the above process, please refer to Bishop and Hodyss (2007). The many matrix products and eigenvector decompositions involved in the SENCORP algorithm (i.e., steps 1-5) will certainly result in high computational costs. Furthermore, the SENCORP approach cannot be directly applied in the ensemble-based 4DVar methods (e.g., Tian et al., 2010; Wang et al., 2010) in which the spurious correlations between the model grids and observational sites should be ameliorated, since it can only provide moderation functions over the model grids.
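The overall flow of steps 1-5 can be sketched in code. The block below is a loose illustration under stated assumptions, not the exact formulation of Bishop and Hodyss (2007), whose equations are not reproduced in this text. In particular, the Gaussian periodic smoother with width `ds`, the element-wise reading of the power `m`, the use of a matrix power `q` for the matrix product smoother, and the renormalization to unit diagonal are all assumptions, and the function names are hypothetical.

```python
import numpy as np

def smooth_periodic(Xp, ds):
    """Assumed smoother: Gaussian weights of width ds on a periodic 1-D grid."""
    n = Xp.shape[0]
    idx = np.arange(n)
    d = np.abs(idx[:, None] - idx[None, :])
    d = np.minimum(d, n - d)
    W = np.exp(-0.5 * (d / ds) ** 2)
    W /= W.sum(axis=1, keepdims=True)       # each row of weights sums to one
    return W @ Xp

def sencorp_like(X, ds=2.0, m=2, q=2, r=2):
    """Moderation matrix loosely following steps 1-5 (illustrative assumptions)."""
    Xp = X - X.mean(axis=1, keepdims=True)             # step 1: ensemble perturbations
    Xs = smooth_periodic(Xp, ds)                       # step 1: smoothed perturbations
    Xs = Xs / np.linalg.norm(Xs, axis=1, keepdims=True)  # step 2: normalize each grid point
    Cs = Xs @ Xs.T                                     # step 2: sample correlation matrix
    Cm = Cs ** m                                       # step 3: element-wise power m
    P = np.linalg.matrix_power(Cm, q)                  # step 4: matrix product smoother
    dg = np.sqrt(np.diag(P))
    P = P / np.outer(dg, dg)                           # rescale to unit diagonal (assumed)
    return P ** r                                      # step 5: element-wise power r
```

Even in this simplified form, the cost is dominated by dense n × n matrix products, which is why applying the procedure on the full model grid becomes expensive as n grows.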

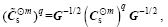

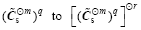

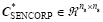

To address the aforementioned issues, we first construct the SENCORP matrix CSENCORP on low-resolution (and thus sparse) grids, so that the computational cost is significantly reduced. That is, we first implement steps 1-5 to obtain a sparse (i.e., low-resolution)

and

where E contains all of the eigenvectors, λ is a diagonal matrix whose diagonal elements are eigenvalues, and

To implement the SFAM localization scheme in PODEn4DVar, we must also provide the moderation correlations between each model grid and the observational locations, which can be obtained by a simple interpolation procedure. Figure 1 is a schematic diagram of this interpolation procedure. Suppose that the red circle is an observation location. One can then use the local correlation samples over its nearby model grids (for example, the green grids) to interpolate the local correlation samples $c_{y,j}$ ($j = 1, \ldots, l$, where $l$ is the number of measurements) for this observational location. Consequently, the moderation element $(C_{\mathrm{SFAM}})_{ij}$ between the $i$th model grid and the $j$th measurement is computed by
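One possible reading of the EOF-plus-interpolation idea is sketched below. It is an assumption-laden illustration rather than the paper's SFAM formula, which is not reproduced in this text: a coarse-grid moderation matrix is factored by an eigendecomposition, the leading factors (taken here as the "local correlation samples") are linearly interpolated to the model grid and to the observation locations on a periodic domain, and the cross moderation matrix is formed as the product of the two interpolated factor sets. The function name `sfam_like` and all of its arguments are hypothetical.

```python
import numpy as np

def sfam_like(C_coarse, coarse_pos, grid_pos, obs_pos, period, n_modes):
    """Grid-to-observation moderation matrix from a coarse moderation matrix."""
    lam, E = np.linalg.eigh(C_coarse)               # C_coarse = E diag(lam) E^T
    order = np.argsort(lam)[::-1][:n_modes]         # keep the leading modes
    lam_k = np.clip(lam[order], 0.0, None)          # guard against tiny negative values
    factors = E[:, order] * np.sqrt(lam_k)          # coarse-grid correlation samples

    def interpolate(pos):
        # Periodic linear interpolation of every retained mode to the positions.
        cols = [np.interp(pos, coarse_pos, factors[:, k], period=period)
                for k in range(factors.shape[1])]
        return np.column_stack(cols)

    rho_x = interpolate(grid_pos)                   # samples on the model grid
    rho_y = interpolate(obs_pos)                    # samples at observation sites
    return rho_x @ rho_y.T                          # shape: (n grid points, l observations)
```

With all modes retained and with the target positions equal to the coarse positions, this construction returns the coarse matrix itself. Truncating the modes or interpolating to new positions only costs matrix products whose size is set by the number of retained modes.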

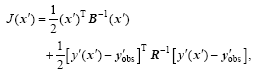

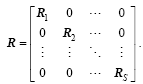

By minimizing the following incremental format of the 4DVar cost function, Eq. (11), one can obtain an optimal increment of the initial condition,

where $x' = x - x_b$ is the perturbation of the background field $x_b$ at the initial time $t_0$,

$$(y_k)' = y_k(x_b + x') - y_k(x_b), \tag{14}$$

$$y'_{\mathrm{obs},k} = y_{\mathrm{obs},k} - y_k(x_b), \tag{15}$$

$$y_k = H_k\left(M_{t_0 \to t_k}(x)\right). \tag{16}$$

Here, the superscript T represents a transpose, the subscript b denotes the background value, the index $k$ stands for the observation time, $S$ is the total number of observation time steps in the assimilation window, $M_{t_0 \to t_k}$ is the forecast model integrated from $t_0$ to $t_k$, $H_k$ is the observation operator, and the matrices B and $R_k$ are the background and observational error covariances, respectively. Minimizing the 4DVar cost function, Eq. (11), yields the optimal increment of the initial condition.
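To make the roles of Eqs. (14)-(16) concrete, the sketch below evaluates an incremental 4DVar cost of the standard quadratic form, a background term in $x'$ weighted by the inverse of B plus a sum over observation times of the misfit $(y_k)' - y'_{\mathrm{obs},k}$ weighted by the inverse of $R_k$. That this form coincides with Eq. (11) is an assumption, since the equation is not reproduced in this text. The toy linear model, the observation operator `H`, and all function names are hypothetical.

```python
import numpy as np

def incremental_cost(dx, xb, B_inv, R_inv, y_obs, forecast_obs):
    """
    Assumed incremental 4DVar cost:
      J(x') = 0.5 x'^T B^-1 x'
            + 0.5 sum_k [(y_k)' - y'_obs,k]^T R_k^-1 [(y_k)' - y'_obs,k].
    forecast_obs(x) returns the list of simulated observations
    y_k(x) = H_k(M_{t0->tk}(x)), one vector per observation time.
    """
    yb = forecast_obs(xb)                    # y_k(x_b)
    yp = forecast_obs(xb + dx)               # y_k(x_b + x')
    J = 0.5 * dx @ B_inv @ dx
    for k in range(len(y_obs)):
        y_pert = yp[k] - yb[k]               # Eq. (14)
        innov = y_obs[k] - yb[k]             # Eq. (15)
        d = y_pert - innov
        J += 0.5 * d @ R_inv[k] @ d
    return J

# Hypothetical toy setting: linear damping model, every other component observed.
H = np.eye(4)[::2]

def toy_forecast_obs(x, steps=3):
    out = []
    for _ in range(steps):
        x = 0.9 * x                          # stand-in for the forecast model M
        out.append(H @ x)                    # Eq. (16) with a linear H_k
    return out
```

With noise-free observations generated from a known truth, the observation term vanishes at `dx = truth - xb` and only the background term remains, which gives a quick sanity check of any implementation.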

With the prepared background field xb, the initial model perturbations

where V is an orthogonal matrix and is derivable from

We mark

and rewrite Eq. (18) as follows:

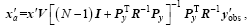

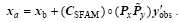

Similar to Tian et al. (2011) and Tian and Xie (2012), the SFAM function is applied to the matrix

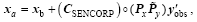

For comparison, we also implement the SENCORP and static localization schemes in PODEn4DVar, as follows:

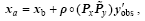

and

where each element $\rho_{i,j}$ of the modification matrix $\rho$ is given by Eqs. (31)-(32) in Tian and Feng (2015).

In this section, the PODEn4DVar approach with the SFAM scheme is evaluated within the model of Lorenz (1996),

$$\frac{dx_j}{dt} = (x_{j+1} - x_{j-2})\,x_{j-1} - x_j + F, \quad j = 1, \ldots, n,$$

with cyclic boundary conditions, $x_{-1} = x_{n-1}$, $x_0 = x_n$, and $x_{n+1} = x_1$. One can choose any dimension $n$ greater than 4 and obtain chaotic behavior for suitable values of the external forcing $F$. In this configuration, we take $n = 40$ and $F = 8$. Here, $F = 8$ represents the perfect model used to produce the "true" and "observational" fields. However, in the following assimilation experiments, we adopt $F = 9$ to introduce model error. For computational stability, a time step of 0.05 units (equivalent to 6 h in Lorenz (1996)) is applied. The experimental settings are exactly the same as those described in Tian et al. (2011).
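A minimal sketch of the Lorenz-96 model with the settings above (n = 40, time step 0.05, F = 8 for the truth and F = 9 for the imperfect forecast model) is given below. The fourth-order Runge-Kutta integrator is an assumption, since the time-stepping scheme is not stated in this text, and the function names are hypothetical.

```python
import numpy as np

def lorenz96_tendency(x, F):
    """dx_j/dt = (x_{j+1} - x_{j-2}) x_{j-1} - x_j + F with cyclic indices."""
    return (np.roll(x, -1) - np.roll(x, 2)) * np.roll(x, 1) - x + F

def lorenz96_step(x, F, dt=0.05):
    """One fourth-order Runge-Kutta step (assumed integrator)."""
    k1 = lorenz96_tendency(x, F)
    k2 = lorenz96_tendency(x + 0.5 * dt * k1, F)
    k3 = lorenz96_tendency(x + 0.5 * dt * k2, F)
    k4 = lorenz96_tendency(x + dt * k3, F)
    return x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

# "Truth" run with F = 8 and imperfect-model run with F = 9 from the same state.
x_true = np.full(40, 8.0)
x_true[19] += 0.01                     # small perturbation to trigger chaotic behavior
x_model = x_true.copy()
for _ in range(100):
    x_true = lorenz96_step(x_true, F=8.0)
    x_model = lorenz96_step(x_model, F=9.0)
```

The uniform state x_j = F is a fixed point of the equations, so a small perturbation is added before integrating. The two runs diverge because of the different forcing, which is the model error the assimilation has to cope with.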

The performance of PODEn4DVar with the SFAM scheme is examined in comparison with the SENCORP localization scheme (Bishop and Hodyss, 2007) and the static localization scheme (Tian et al., 2011). In the following experiments, we choose a low resolution (e.g., retaining only one of every two model grids) to construct the SFAM moderation function, so as to explore an appropriate resolution. We also test the sensitivity to the parameters of the SFAM moderation function, namely m, q, r, and the smoothing parameter ds. The default number of observations is 20 (equally spaced at every observation time). Observations are taken every two time steps (or 12 h) and are generated by adding random perturbations of magnitude 0.03 to the time series of the true state (F = 8). We consider an assimilation window length of 4 time steps (a standard 24-h daily assimilation cycle). The default parameter setups for the static localization scheme (only one direction in the Lorenz-96 model) are: ensemble size N = 60 and Schur radius r0 = 4.
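The observation setup described above can be sketched as follows. Several details are assumptions made for illustration: the value 0.03 is interpreted as the standard deviation of Gaussian noise, the 20 observed points are taken to be every second grid point starting from the first, and the Runge-Kutta integrator is assumed. The function names are hypothetical.

```python
import numpy as np

def l96_step(x, F, dt=0.05):
    """One RK4 step of the Lorenz-96 model (assumed integrator)."""
    f = lambda z: (np.roll(z, -1) - np.roll(z, 2)) * np.roll(z, 1) - z + F
    k1 = f(x)
    k2 = f(x + 0.5 * dt * k1)
    k3 = f(x + 0.5 * dt * k2)
    k4 = f(x + dt * k3)
    return x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

def make_observations(x0, n_steps=4, F_true=8.0, obs_every=2,
                      n_obs=20, sigma=0.03, seed=0):
    """Truth trajectory plus noisy observations at equally spaced grid points."""
    rng = np.random.default_rng(seed)
    n = x0.size
    obs_idx = np.arange(0, n, n // n_obs)[:n_obs]   # equally spaced sites (assumed layout)
    x = x0.copy()
    truth = [x.copy()]
    obs = {}
    for step in range(1, n_steps + 1):
        x = l96_step(x, F_true)
        truth.append(x.copy())
        if step % obs_every == 0:                   # observe every two steps (12 h)
            obs[step] = x[obs_idx] + sigma * rng.standard_normal(n_obs)
    return np.array(truth), obs_idx, obs
```

For one 24-h window (four steps), this yields observation vectors at steps 2 and 4, each with 20 values, which matches the default configuration described in the text.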

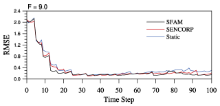

Figure 2 compares the performance of PODEn4DVar with the SFAM, SENCORP, and static localization techniques under the imperfect-model assumption (F = 9). All three techniques perform well, with low overall root-mean-square errors (RMSEs). SFAM performs slightly better than the other techniques, especially in the first 20 time steps, after which the three schemes perform almost identically until the end of the assimilation process. The performance of SENCORP is similar to that of the static scheme. SFAM is superior to SENCORP, which is likely due to the use of EOF decomposition in SFAM: EOF decomposition captures the main spatial correlation information of the state variables and discards some of the Gaussian white noise, which gives better moderation.

To examine the sensitivity of the PODEn4DVar assimilation to the parameters of the SFAM localization technique, we design four groups of experiments. Figure 3a shows that the smoothing parameter ds has only a slight impact on the PODEn4DVar assimilation under the imperfect-model assumption; ds = 16 performs better than the other values. Figure 3b shows that parameter m also has only a slight impact on the PODEn4DVar assimilation under the imperfect-model assumption. Generally, we choose m = 2. Figure 3c shows that the performance of the PODEn4DVar assimilation is sensitive to parameter q. When q = 2, the performance of the PODEn4DVar assimilation is stable and the RMSEs are the lowest. Figure 3d demonstrates that parameter r has only a slight impact on the PODEn4DVar assimilation under the imperfect-model assumption. Parameter r plays the same role as m, so we also choose r = 2.

Figure 3. Time series of RMSEs for different parameters of SFAM with the Lorenz-96 model with model error (F = 9): (a) smoothing parameter ds; (b) m; (c) q; (d) r.

For the two groups of experiments, the ratio of the computational costs for the three (i.e., SFAM, SENCORP, and static) localization schemes is about 1:1.5:2.3. Thus, the SFAM scheme is efficient due to its sparse processing and EOF decomposition techniques.

This study has proposed the SFAM localization technique and implemented it in PODEn4DVar. The robustness and potential merits of PODEn4DVar with SFAM localization have been demonstrated using the Lorenz-96 model. For comparison, PODEn4DVar with the SENCORP localization and with the static localization has also been tested using the Lorenz-96 model. The assimilation results imply that, to a certain extent, PODEn4DVar with SFAM localization outperforms PODEn4DVar with the SENCORP and static localization schemes. Owing to its sparse processing and EOF decomposition techniques, PODEn4DVar with SFAM localization is also significantly cheaper computationally than PODEn4DVar with SENCORP localization.
